We're kicking off our new partnership with MTurk Suite with an in-depth review of our site, our processes, and how we're serving the worker community.

It's been over two years since TurkerView launched, and in that time we've seen amazing progress around fair wage practices. We're blessed with a strong community of workers, and with their expertise on the platform we want to share what we've learned and offer insight into how we can approach payments for crowd workers moving forward.

Measuring Progress

To define our core userbase, we selected users who submitted data to TurkerView during our first few months of operation and who have continued to interact with the platform through 2019. Their data is at the forefront of understanding how wages are impacted by down time, wasted effort, task searches, and tax obligations. They are the driving force behind our efforts, and we examined their earnings on the platform to get a more controlled overview of wage trends.

In-Task Earnings for Core Users, 2018-2019 (all tasks)

Overall, these users have seen a 30% increase in their average task wage over the last two years. That's fantastic progress, but it's important to remember that these users generally operate at the higher-efficiency end of the worker spectrum, and that this figure doesn't cover time spent between tasks.

Wage Sentiment Analysis

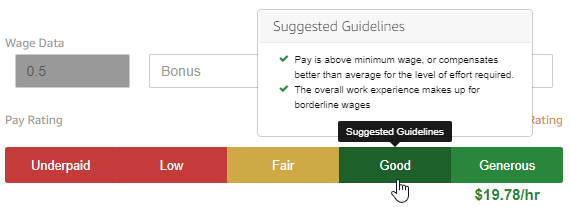

As we've seen wages increase across our core userbase, we've also noticed a shift in their expectations for payment. On TurkerView, users are asked to rate how they felt about the payment of a task they're reviewing and are given a basic guideline on suggested ranges. Although we haven't updated that guideline since its initial launch, workers have begun proactively shifting their expectations and relaying them to fellow workers.

Average Wage Sentiment, 2018-2019 (tasks paying $7.25/hr-$10.50/hr)

For tasks in the same pay range, worker sentiment has dropped almost 11%. Over time, and as their own wages increase, workers are rating tasks with stagnant wages as no longer acceptable.

These shifts match across most of our currently proposed ratings guidelines, which are displayed to workers every time they leave a review on our website. It's great to see workers continuing to value their own work and time, and we want to facilitate that by updating our services to reflect those expectations.

What We're Doing

Sentiment Ratings

Continuing to use historical sentiment ratings makes it difficult to convey our users' current feelings. While helpful in the moment, perspectives change over time, and we're seeing a lot of indications that the sentiment ratings aren't doing a great job of projecting accurate information. In response, we'll be removing them from the web platform and turning them into an internal metric for workers only (similar to our return review system).

Workers won't see any adverse effects on their current tools, and our API will continue to return these ratings for evaluation in their workflow if desired. These changes will allow us to offer time-based metrics on requester accounts, so past payment practices carry less weight.

Wage Guidelines

As we push for fair payment for all workers, it's important to set new standards for what is deemed "fair". The table below represents our plan for keeping our platform up to date, and in the service of workers, in 2020. We've used feedback from workers (and a special thanks to Mark Whiting for publishing his team's data on this topic) to settle on ranges that more accurately reflect their associated sentiment tier. We plan to update our guidelines quarterly, and to reflect the tax burdens self-employed individuals face (which apply to all crowdworkers on MTurk and similar platforms).

2020 (Q3) Wage Guidelines (reviewed quarterly)

| Rating | Wage Range |
|---|---|
| Underpaid | Less than Federal Minimum Wage (+15% SE tax) |
| Low | Less than the highest US State Minimum Wage (+15% SE tax) |
| Fair | 100% - 125% of the highest US State Minimum Wage (+15% SE tax) |
| Good | 125% - 150% of the highest US State Minimum Wage (+15% SE tax) |
| Generous | 150% of the highest US State Minimum Wage or higher (+15% SE tax) |
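As a rough illustration of how the tiers above could be applied to an hourly wage, here is a minimal Python sketch. It is not TurkerView's implementation. It assumes the "+15% SE tax" is applied as a 1.15 multiplier on each minimum-wage threshold, that each tier includes its lower bound, and that the highest state minimum wage is supplied by the caller. The function name `rate_wage` and the $13.50 figure in the usage lines are illustrative placeholders, not values from this post.

```python
FEDERAL_MIN_WAGE = 7.25  # USD per hour, US federal minimum wage
SE_TAX_MARKUP = 0.15     # assumption: "+15% SE tax" scales each threshold by 1.15


def rate_wage(hourly_wage: float, highest_state_min_wage: float) -> str:
    """Map an hourly wage (USD) to a guideline tier.

    Tier boundaries follow the table; treating each lower bound as
    inclusive is an assumption made for this sketch.
    """
    federal_floor = FEDERAL_MIN_WAGE * (1 + SE_TAX_MARKUP)
    state_floor = highest_state_min_wage * (1 + SE_TAX_MARKUP)

    if hourly_wage < federal_floor:
        return "Underpaid"
    if hourly_wage < state_floor:
        return "Low"
    if hourly_wage < 1.25 * state_floor:
        return "Fair"
    if hourly_wage < 1.50 * state_floor:
        return "Good"
    return "Generous"


if __name__ == "__main__":
    # 13.50 is a placeholder for the highest state minimum wage.
    for wage in (8.00, 10.00, 16.00, 20.00, 25.00):
        print(f"${wage:.2f}/hr -> {rate_wage(wage, 13.50)}")
```

Passing the state minimum wage in as a parameter keeps the sketch usable as the guidelines are reviewed each quarter, since only the input changes.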

We understand the budgetary strain that raising our guidelines can place on requesters, but when used properly MTurk is a source of high-quality, convenient participant samples. We want to move in a direction that shares the value the platform provides with the people actually completing the work. We are always available to mediate discussions with funding sources or IRBs if researchers need help conveying the importance of paying fair wages.

These guidelines will be applied to tasks posted in Q3 2020 and onward to give requesters a fair amount of time to adjust. As it would be unfair to retroactively apply them to researchers who used our original guidelines for pricing, we'll be splitting historical wage & sentiment statistics from the old guidelines for display on our web app. This means only tasks posted from July 1st, 2020 onward will be measured under the new guidelines.

We spent $600K on a national probability sample. And $17K on a concurrent MTurk sample. Same findings in 21 of 24 experiments. https://t.co/OTod4v59cK

— Noel Brewer (@noelTbrewer) April 16, 2019

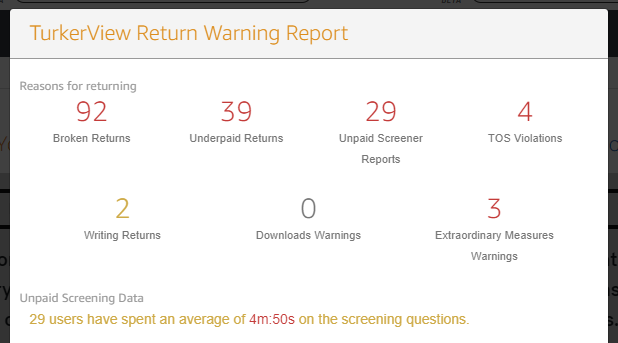

Representing Fairness - Return Reviews

In response to the removal of our sentiment ratings from the web platform, we'll be replacing them with something we feel more accurately serves their purpose: showing whether a requester is treating workers fairly.

Currently, our users have access to an internal review system for "bad" tasks that allows them to quickly alert other workers when a task violates a set of worker-generated guidelines (including low payment, unfair screening practices, and broken tasks). These reports generally lend themselves to quicker, less permanent submissions.

This data is rather transient in the current system, as the reports are only tied to individual tasks. It's difficult for users to tell from this feature whether a requester has a history of problems, so we'll be bringing the aggregate statistics from these reviews onto the web platform for more long-form worker review. We'll only display filtered aggregate data, so workers can continue to feel secure in sharing their experiences, but we hope this information will add some nuance to requester profiles that don't always paint a full picture. We hope it will also give requesters insight into where their task design may be falling short in helping workers provide quality data.
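To make the idea of rolling task-level reports up to the requester level more concrete, here is a small Python sketch. It is purely hypothetical: the post does not describe how the data is filtered, so the minimum-report threshold, the `(requester_id, reason)` record shape, and the function name `aggregate_return_reviews` are all assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


def aggregate_return_reviews(reports, min_reports=5):
    """Count return-review reasons per requester.

    `reports` is an iterable of (requester_id, reason) pairs, a
    hypothetical record shape. Requesters with fewer than `min_reports`
    total reports are left out so that a single worker's report cannot
    be singled out; this threshold is one assumed way of "filtering".
    """
    per_requester = defaultdict(Counter)
    for requester_id, reason in reports:
        per_requester[requester_id][reason] += 1

    return {
        requester_id: dict(counts)
        for requester_id, counts in per_requester.items()
        if sum(counts.values()) >= min_reports
    }


if __name__ == "__main__":
    sample = (
        [("A", "low_pay")] * 4
        + [("A", "broken_task")] * 2
        + [("B", "low_pay")]
    )
    # Requester "B" has a single report and is filtered out.
    print(aggregate_return_reviews(sample))
```

Only per-requester counts leave the function, which matches the stated goal of showing aggregates rather than individual reports.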

We're Making Progress

We cannot express enough gratitude to the hundreds of researchers, businesses, and workers who engage in open discussion about online crowd work. The positive trends we've seen over the last two years are impressive.

We'll be adding extra emphasis across our public-facing applications to draw attention to what constitutes generous payment and help facilitate these discussions, and we'll continue to pop in on social media to share these thoughts as well!

For workers, we're excited to bring these changes to our platform and, as always, look forward to feedback from the broader MTurk community.